As I have been working on the relationships between economics and ethics for a couple of years now, I have had several opportunities to reflect on the way scholars produce knowledge about ethical issues. In a previous post, I already considered the role played by moral intuitions in Derek Parfit’s reasoning on population ethics. As I am now reading Parfit’s monumental On What Matters and Lazari-Radek and Singer’s The Point of View of the Universe, the issue has once again come to my attention, since both Parfit and Lazari-Radek and Singer tackle it explicitly.

My current readings have led me to revise my view on this issue somewhat. Comparing the way economists (especially social choice theorists) and philosophers deal with ethical problems, I had grown used to distinguishing between what can be called an ‘axiomatic approach’ and a ‘constructivist approach’ to ethical problems. The former tackles ethical issues by first identifying basic principles (‘axioms’), conceived as requirements that any moral doctrine or proposition must satisfy, and then deriving (most often through logical reasoning) their implications regarding what is morally necessary, possible, permissible, forbidden, and so on. The latter deals with ethical issues through thought experiments, which most often consist of more or less artificial decision problems. Philosophy abounds with examples: from Rawls’s ‘veil of ignorance’ to Parfit’s various spectrum and teleportation thought experiments and the many variants of the so-called ‘trolley problem’, philosophers routinely construct decision problems to determine what is intuitively regarded as morally permitted, mandatory, or forbidden.

John Harsanyi’s two utilitarian theorems, his ‘impartial observer’ theorem and his ‘aggregation theorem’, provide a good illustration of these two approaches. The former corresponds to a constructivist approach and builds on a thought experiment using the veil of ignorance device. Harsanyi asked which society a rational agent placed behind a thin veil of ignorance would choose to live in. Behind such a veil, the agent would know neither his social position nor his personal identity, including his personal preferences. Harsanyi famously argued that, behind this veil, a rational agent should assign an equal probability to being any member of the population and should therefore choose the society that maximizes average utility, which is precisely the expected utility of the ‘impartial observer’. Harsanyi’s aggregation theorem also provides a defense of utilitarianism, but in quite a different way. It shows that if the members of the population have preferences over prospects (i.e. societies) that satisfy the axioms of expected utility theory, if a ‘benevolent dictator’ also has preferences satisfying these axioms, and if the relationship between the two sets of preferences satisfies a Pareto condition, then the benevolent dictator’s preferences can be represented by an additive social welfare function, that is, a weighted sum of individual utilities (obtaining an unweighted sum requires a further impartiality assumption).
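In a standard notation, the two theorems can be sketched as follows. The symbols $n$, $u_i$, $U_i$, $a_i$ and $b$ are introduced here for illustration only and do not come from the post. For the impartial observer, assigning probability $1/n$ to each of the $n$ positions turns expected utility into average utility. For the aggregation theorem, the conclusion is an affine representation whose weights $a_i$ are positive under a strong Pareto condition; making them all equal is an additional step.

```latex
% Impartial observer: probability 1/n of being each individual i,
% whose utility in society x is u_i(x)
EU_{\text{IO}}(x) = \sum_{i=1}^{n} \frac{1}{n}\, u_i(x) = \frac{1}{n} \sum_{i=1}^{n} u_i(x)

% Aggregation theorem: the dictator's expected utility W over prospects p
% is an affine combination of the individuals' expected utilities U_i(p)
W(p) = \sum_{i=1}^{n} a_i\, U_i(p) + b
```

With a fixed population, maximizing $EU_{\text{IO}}$ ranks societies exactly as the unweighted total $\sum_i u_i(x)$ does, which is the equal-weights case $a_1 = \dots = a_n$ of the second formula.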

Moral philosophers generally refer to another distinction, which I originally thought was essentially equivalent to the axiomatic/constructivist one. Regarding how moral claims can be justified, moral philosophers divide into ‘foundationalists’ and ‘intuitionists’. Intuitionism is grounded in the method that Rawls labeled ‘reflective equilibrium’. Basically, it holds that our moral intuitions are both the starting point of moral reasoning and the ultimate data against which moral claims should be evaluated. Starting from such intuitions, moral reasoning may lead to claims that contradict our initial intuitions. Intuitions and the conclusions of moral reasoning are then iteratively revised until they match. Foundationalism proceeds quite differently. Here, moral claims are defended and accepted on the basis of basic, self-evident principles from which moral implications are deduced. Parfit’s discussion of personal identity and Larry Temkin’s critique of the transitivity principle in moral reasoning are instances of accounts that proceed along intuitionist lines. By contrast, Sidgwick’s defense of utilitarianism was essentially foundationalist, as it rested on a set of ‘axioms’ (justice, rational benevolence, prudence) from which utilitarian conclusions were derived.

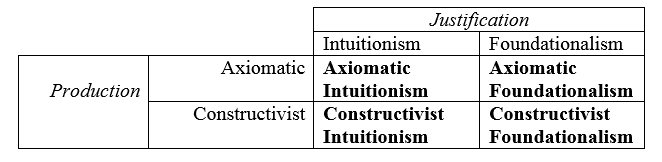

There is an apparent affinity between the axiomatic approach and foundationalism on the one hand, and between the constructivist approach and intuitionism on the other. Until recently, I considered the former pair to be essentially characteristic of the way normative economists and social choice theorists tackle ethical issues, while the latter was more consistent with the way philosophers proceed. However, I now realize that if this affinity is real, it cannot simply be because the axiomatic/constructivist and foundationalist/intuitionist distinctions are isomorphic. Indeed, it now seems to me that they do not concern the same aspect of moral reasoning: the former concerns how ethical knowledge is produced, the latter how moral claims are justified. While production and justification are related, they remain quite different things. Therefore, there is no a priori reason to reject the possibility of combining foundationalism with constructivism and (perhaps less obviously) intuitionism with the axiomatic approach. We would then have the following four possibilities:
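
| | Foundationalism | Intuitionism |
|---|---|---|
| Axiomatic approach | Axiomatic Foundationalism | Axiomatic Intuitionism |
| Constructivist approach | Constructivist Foundationalism | Constructivist Intuitionism |
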
I think that ‘Axiomatic Foundationalism’ and ‘Constructivist Intuitionism’ are unproblematic categories. Examples of the former are Harsanyi’s aggregation theorem, John Broome’s utilitarian account based on separability assumptions, and, at least as initially understood, Arrow’s impossibility theorem. All build on an axiomatic approach to derive moral, ethical, or social choice results that take the form either of necessity claims (Harsanyi, Broome) or of impossibility claims (Arrow). Moreover, these examples are interesting precisely because they lead to essentially counterintuitive results, which their proponents have argued require us to give up our original intuitions. Examples of ‘Constructivist Intuitionism’ abound in moral philosophy. As mentioned above, Temkin’s claims against transitivity and aggregation and Parfit’s reductionist account of personhood are good examples of a constructivist approach. They build on thought experiments about decision problems and essentially ask us which solution is consistent with our intuitions. They are also instances of intuitionism because, although intuitions fuel moral reasoning from the start, they remain open to reconsideration (at least in principle).

Harsanyi’s impartial observer theorem is an instance of ‘Constructivist Foundationalism’. Harsanyi’s use of the veil of ignorance device places it within the constructivist approach. At the same time, Harsanyi takes choice in accordance with the criteria of expected utility theory to be a foundational assumption of moral reasoning. It is the combination of this foundational assumption with a highly artificial decision problem that leads to the utilitarian conclusion. Finally, one may wonder whether there really are cases of ‘Axiomatic Intuitionism’. I would suggest that Sen’s Paretian liberal paradox may be interpreted this way. Admittedly, the Paretian liberal paradox could also be seen as a case of Axiomatic Foundationalism, as Sen’s initial intention was to lead economists to reconsider their intuitions regarding the compatibility of freedom and efficiency. However, rather than endorsing the claim that freedom and efficiency are inconsistent, the discussion that followed Sen’s result has focused on redefining Sen’s axiomatic characterization of freedom so as to preserve the initial intuition. Still, the contrast between Axiomatic Foundationalism and Axiomatic Intuitionism is not that sharp. This probably reflects the fact that, as a growing number of moral philosophers recognize, the distinction between intuitionism and foundationalism has been historically exaggerated. I would nonetheless suggest that the constructivist/axiomatic distinction is a more solid and transparent one.
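The clash at the heart of Sen’s Paretian liberal paradox can be made concrete with the textbook ‘Lady Chatterley’s Lover’ illustration, which the post does not spell out. The Python sketch below is a minimal reconstruction under stated assumptions. The state labels `none`, `prude` and `lewd` and the two rankings are hypothetical stand-ins for the prude and the lascivious reader of the standard example, and only weak Pareto plus a minimal liberty condition are encoded.

```python
from itertools import permutations

# Hypothetical labels for the three social states of the textbook example:
#   "none"  -> nobody reads the book
#   "prude" -> only the prude reads it
#   "lewd"  -> only the lewd reads it
STATES = ("none", "prude", "lewd")

# Individual rankings, best first (assumed, following the standard illustration).
PRUDE = ["none", "prude", "lewd"]
LEWD = ["prude", "lewd", "none"]


def prefers(ranking, x, y):
    """Return True if x is ranked strictly above y in `ranking`."""
    return ranking.index(x) < ranking.index(y)


def social_strict_preferences():
    """Return the set of pairs (x, y) meaning 'society strictly prefers x to y'."""
    pairs = set()
    # Weak Pareto: if both individuals prefer x to y, so does society.
    for x, y in permutations(STATES, 2):
        if prefers(PRUDE, x, y) and prefers(LEWD, x, y):
            pairs.add((x, y))
    # Minimal liberalism: each individual is decisive over the pair of states
    # that differ only in whether he or she reads the book.
    for ranking, own_state in ((PRUDE, "prude"), (LEWD, "lewd")):
        if prefers(ranking, "none", own_state):
            pairs.add(("none", own_state))
        else:
            pairs.add((own_state, "none"))
    return pairs


def has_cycle(pairs):
    """Return True if some x > y > z > x cycle appears among the three states."""
    return any(
        (a, b) in pairs and (b, c) in pairs and (c, a) in pairs
        for a, b, c in permutations(STATES, 3)
    )


if __name__ == "__main__":
    pairs = social_strict_preferences()
    print(sorted(pairs))
    print(has_cycle(pairs))  # True: no state is socially best
```

Every state is beaten by another: `none` beats `prude` (the prude’s liberty), `prude` beats `lewd` (Pareto), and `lewd` beats `none` (the lewd’s liberty). Reformulating the liberty condition, as much of the subsequent literature did, is one way to block such a cycle while keeping the intuition that both freedom and efficiency matter.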