[Update: As I suspected, the original computations were wrong. They have been corrected, with a new and more straightforward result!]

For some reason, I have been thinking about the famous Newcomb's paradox, and I came up with a "solution" that I have not been able to find in the vast literature on the topic. The basic idea is that a consistent Bayesian decision-maker should have a subjective belief about the nature of the "Oracle" who, in the original statement of the paradox, is deemed to predict your choice of taking either one or two boxes perfectly. In particular, one has to assign a probability to the event that the Oracle is truly omniscient, i.e. that he is able to foresee your choice. Another, more philosophical way to state the problem is that the decision-maker must assign a probability to Determinism being true (i.e. the Oracle is omniscient) rather than the Free Will hypothesis (i.e. the Oracle cannot predict your choice).

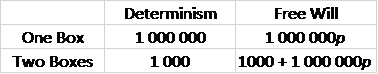

Consider the following table depicting the decision problem corresponding to Newcomb’s paradox:

Here, p denotes the probability that the Oracle will guess that you will pick One Box (and thus put 1 000 000$ in the opaque box), under the assumption that the Free Will hypothesis is true. Of course, as it is traditionally stated, Newcomb's paradox implies that p is a conditional probability (p = 1 if you choose One Box, p = 0 if you choose Two Boxes), but this is the case only if Determinism is true. If the Free Will hypothesis is true, then p is an unconditional probability, as causal decision theorists argue.

Denote by s the probability of the event "Determinism" and by 1-s the resulting probability of the event "Free Will". It is rational for the Bayesian decision-maker to choose One Box if his expected gain from taking one box, g(1B), is higher than his expected gain from taking two boxes, g(2B), that is, if

s > 1/1000.
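The threshold follows from a short calculation. A sketch, assuming the standard payoffs (1 000 000$ in the opaque box if the Oracle guesses One Box, 1 000$ in the transparent box) and that under Determinism the Oracle's guess always matches your choice:

```latex
\begin{aligned}
g(1B) &= s \cdot 1\,000\,000 + (1-s)\, p \cdot 1\,000\,000 \\
g(2B) &= s \cdot 1\,000 + (1-s)\,\bigl[p \cdot 1\,001\,000 + (1-p) \cdot 1\,000\bigr]
       = 1\,000 + (1-s)\, p \cdot 1\,000\,000 \\
g(1B) - g(2B) &= 1\,000\,000\, s - 1\,000 > 0 \iff s > \tfrac{1}{1000}
\end{aligned}
```

The terms in p cancel, which is why the condition does not involve p.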

Interestingly, One Box is the correct choice even if one puts a very small probability on Determinism being the correct hypothesis. Note that this threshold is independent of the value of p. If one has observed a sufficient number of trials in which the Oracle made the correct guess, then one has strong reasons to choose One Box, even if one endorses causal decision theory!
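The independence from p can also be checked numerically. This is a minimal sketch, assuming the standard payoffs (1 000 000$ in the opaque box when the Oracle guesses One Box, 1 000$ in the transparent box); the names `expected_gains` and `prefers_one_box` are illustrative only.

```python
# Payoffs of the standard Newcomb problem, in dollars (assumed standard amounts).
MILLION = 1_000_000
THOUSAND = 1_000


def expected_gains(s: float, p: float) -> tuple[float, float]:
    """Return (g(1B), g(2B)) for a Bayesian who puts probability s on Determinism.

    Under Determinism the Oracle's guess matches the actual choice.
    Under Free Will the Oracle guesses One Box with probability p,
    independently of the actual choice.
    """
    # One Box: the opaque box is full only if the Oracle guessed One Box.
    g_one = s * MILLION + (1 - s) * p * MILLION
    # Two Boxes: always get the 1 000$, plus the million if the Oracle guessed One Box.
    g_two = s * THOUSAND + (1 - s) * (p * MILLION + THOUSAND)
    return g_one, g_two


def prefers_one_box(s: float, p: float) -> bool:
    """True when One Box has the strictly higher expected gain."""
    g_one, g_two = expected_gains(s, p)
    return g_one > g_two


# The verdict flips around s = 1/1000 whatever the value of p.
for p in (0.0, 0.25, 0.5, 0.75, 1.0):
    print(p, prefers_one_box(0.0009, p), prefers_one_box(0.0011, p))
```

Varying p changes both expected gains by the same amount, so only s decides the comparison.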

Now consider the lesser-known "Meta-Newcomb paradox" proposed by the philosopher Nick Bostrom. Bostrom introduces the paradox as follows:

There are two boxes in front of you and you are asked to choose between taking only box B or taking both box A and box B. Box A contains $ 1,000. Box B will contain either nothing or $ 1,000,000. What B will contain is (or will be) determined by Predictor, who has an excellent track record of predicting your choices. There are two possibilities. Either Predictor has already made his move by predicting your choice and putting a million dollars in B iff he predicted that you will take only B (like in the standard Newcomb problem); or else Predictor has not yet made his move but will wait and observe what box you choose and then put a million dollars in B iff you take only B. In cases like this, Predictor makes his move before the subject roughly half of the time. However, there is a Metapredictor, who has an excellent track record of predicting Predictor’s choices as well as your own. You know all this. Metapredictor informs you of the following truth functional: Either you choose A and B, and Predictor will make his move after you make your choice; or else you choose only B, and Predictor has already made his choice. Now, what do you choose?

Bostrom argues that this leads to a conundrum for the causal decision theorist:

If you think you will choose two boxes then you have reason to think that your choice will causally influence what’s in the boxes, and hence that you ought to take only one box. But if you think you will take only one box then you should think that your choice will not affect the contents, and thus you would be led back to the decision to take both boxes; and so on ad infinitum.

The point is that, if you believe the "Meta-oracle", then by choosing Two Boxes you have good reasons to think that your choice will causally influence the "guess" of the Oracle (he will not put 1 000 000$ in the opaque box), and therefore causal decision theory tells you to choose One Box. However, if you believe the Meta-oracle, then by choosing One Box you have good reasons to think that your choice will not causally influence the guess of the Oracle. In this case, causal decision theory recommends Two Boxes, as in the standard Newcomb's paradox.
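This oscillation can be made concrete with a toy model. It is only a sketch under simplifying assumptions: the causal decision theorist takes the Meta-oracle's truth functional at face value, so anticipating Two Boxes means the Oracle moves after you (the contents depend causally on your choice), while anticipating One Box means the Oracle has already moved (the contents are fixed, so Two Boxes dominates). The functions `cdt_recommendation` and `deliberate` are hypothetical names.

```python
def cdt_recommendation(anticipated: str) -> str:
    """Return what causal decision theory recommends, given the choice you expect to make.

    Assumes the Meta-oracle's truth functional holds:
    "2B" -> the Oracle moves after you, so the contents depend on your choice;
    "1B" -> the Oracle has already moved, so the contents are fixed.
    """
    if anticipated == "2B":
        # Oracle moves After: taking only B yields 1 000 000$, taking both yields 1 000$.
        return "1B"
    if anticipated == "1B":
        # Oracle moved Before: whatever B contains, taking both adds 1 000$.
        return "2B"
    raise ValueError("anticipated must be '1B' or '2B'")


def deliberate(start: str, rounds: int) -> list[str]:
    """Feed each recommendation back in as the new anticipated choice."""
    history = [start]
    for _ in range(rounds):
        history.append(cdt_recommendation(history[-1]))
    return history


# Deliberation never settles: the sequence alternates between "2B" and "1B".
print(deliberate("2B", 5))
```

The loop has no fixed point, which is the "and so on ad infinitum" of Bostrom's description.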

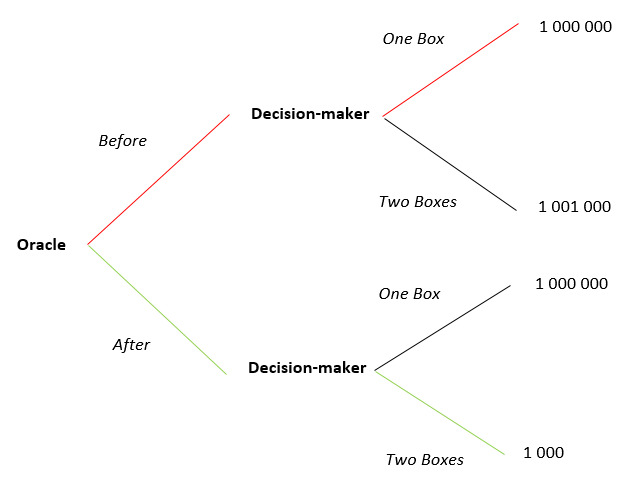

The above reasoning also seems to work for the Meta-Newcomb paradox, even though the computations are slightly more complicated. The following tree represents the decision problem if the Determinism hypothesis is true:

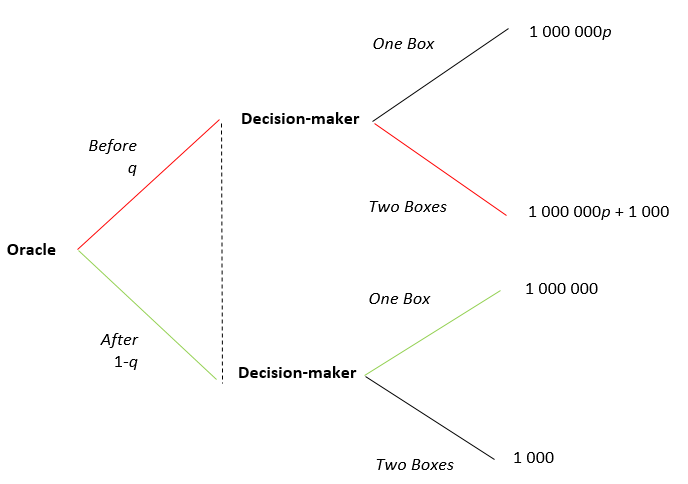

Here, "Before" and "After" denote the events where the Oracle predicts and observes your choice, respectively. The green path and the red path in the tree correspond to the truth functional stated by the Meta-oracle. The second tree depicts the decision problem if the Free Will hypothesis is true.

It is similar to the first one except for small but important differences: if the Oracle predicts your choice (he makes his guess before you choose), your payoff depends on the (subjective) probability p that he guesses One Box, as in Newcomb's paradox above; moreover, the Oracle is now an authentic player in an imperfect-information game, with q the decision-maker's belief that the Oracle has already made his choice (note that if Determinism is true, q is irrelevant for exactly the same reason as p in Newcomb's paradox). Here, the green and red paths depict the decision-maker's best responses.
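The two trees can be summarised by the expected gain of each choice. A minimal sketch, assuming the standard Newcomb amounts and the following reading of the trees: under Determinism the Meta-oracle's truth functional holds (One Box leads to "Before" with a correct prediction, Two Boxes leads to "After"); under Free Will the Oracle has already moved with probability q, in which case he guessed One Box with probability p, and otherwise he observes your choice and fills box B iff you take only B. The function names are illustrative.

```python
MILLION = 1_000_000
THOUSAND = 1_000


def gains_determinism() -> tuple[float, float]:
    """(g(1B), g(2B)) on the Determinism tree (green and red paths)."""
    # One Box: the Oracle moved Before and predicted correctly, so B holds the million.
    # Two Boxes: the Oracle moves After, sees both boxes taken and leaves B empty.
    return float(MILLION), float(THOUSAND)


def gains_free_will(p: float, q: float) -> tuple[float, float]:
    """(g(1B), g(2B)) on the Free Will tree.

    q: belief that the Oracle has already moved (Before).
    p: probability that, moving Before, he guessed One Box.
    """
    g_one = q * p * MILLION + (1 - q) * MILLION
    g_two = q * (p * MILLION + THOUSAND) + (1 - q) * THOUSAND
    return g_one, g_two


def meta_prefers_one_box(s: float, p: float, q: float) -> bool:
    """Bayesian comparison with probability s on Determinism."""
    d_one, d_two = gains_determinism()
    f_one, f_two = gains_free_will(p, q)
    g_one = s * d_one + (1 - s) * f_one
    g_two = s * d_two + (1 - s) * f_two
    return g_one > g_two


# With q = 1/2, One Box wins even when s = 0, for any p.
print([meta_prefers_one_box(0.0, p, 0.5) for p in (0.0, 0.5, 1.0)])
```

Setting q = 1 recovers the standard Newcomb problem, where One Box needs s > 1/1000 again.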

Assume in the latter case that q = ½, as suggested in Bostrom's statement of the problem ("Predictor makes his move before the subject roughly half of the time"). Denote by s the probability that Determinism is true, and thus that the Meta-oracle as well as the Oracle are omniscient. I will spare you the computations, but (if I have not made mistakes) it can be shown that, with q = ½, it is optimal for the Bayesian decision-maker to choose One Box for every s ≥ 0. Without fixing q, the condition is s > 1 - 999/(1000q). Therefore, even if you are a causal decision theorist and you believe strongly in Free Will, you should play as if you believed in Determinism!
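For readers who want the spared computations, here is a sketch of how the threshold can be obtained. It assumes the payoffs of Bostrom's statement: under Determinism the Oracle is always right, and under Free Will the Oracle guesses One Box with probability p if he moves before you, and otherwise observes your choice.

```latex
\begin{aligned}
g_D(1B) - g_D(2B) &= 1\,000\,000 - 1\,000 = 999\,000 \\
g_F(1B) - g_F(2B) &= q\,\bigl[p \cdot 1\,000\,000 - (p \cdot 1\,000\,000 + 1\,000)\bigr]
                    + (1-q)\,(1\,000\,000 - 1\,000)
                   = 999\,000 - 1\,000\,000\, q \\
g(1B) - g(2B) &= s \cdot 999\,000 + (1-s)\,(999\,000 - 1\,000\,000\, q)
               = 999\,000 - (1-s) \cdot 1\,000\,000\, q \\
g(1B) > g(2B) &\iff (1-s)\, q < 0.999 \iff s > 1 - \frac{999}{1000\, q} \qquad (q > 0)
\end{aligned}
```

With q = ½ the right-hand side equals -0.998, so every s ≥ 0 qualifies; more generally the threshold is negative whenever q < 0.999.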

It's a pity, I don't understand English.
